Last year, when the AI hype really exploded, Microsoft's go-to library for building AI solutions in .NET was Semantic Kernel. So although it was still in preview at the time, I started using Semantic Kernel and never looked back. Later, Microsoft introduced Microsoft.Extensions.AI, but I never had the time to take a good look at it.

Now I've finally found some time to explore it. My goal is to write a few posts in which I recreate an application I originally built with Semantic Kernel, to see how far we can get. That is mainly for the upcoming posts, though; in this post we focus on the basics of getting started.

What is Microsoft.Extensions.AI?

The Microsoft.Extensions.AI libraries provide a unified approach for representing generative AI components and enable integration and interoperability with various AI services. Think of it as the dependency injection and logging abstractions you already know and love, but designed specifically for AI services.

These libraries provide a unified layer of C# abstractions for interacting with AI services, such as small and large language models (SLMs and LLMs), embeddings, and middleware. The central abstractions are IChatClient for chat-based language models and IEmbeddingGenerator<TInput, TEmbedding> for embeddings. Whether you're working with OpenAI, Azure OpenAI, or another AI provider, Microsoft.Extensions.AI gives you a consistent programming model that abstracts away the implementation details.
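To make the idea of a unified abstraction concrete, here is a minimal sketch. It assumes the stable 9.5+ shape of the IChatClient interface (early previews used different member names such as CompleteAsync). The AskAsync helper only knows about IChatClient, so the same code would work with an OpenAI, Azure OpenAI, or local client. EchoChatClient is a hypothetical stand-in provider written purely for illustration; it is not part of the library.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.AI;

// Calling code depends only on the IChatClient abstraction,
// so any provider that implements it can be passed in.
static async Task<string> AskAsync(IChatClient client, string question)
{
    // GetResponseAsync(string) is a convenience extension that wraps the text in a user message.
    ChatResponse response = await client.GetResponseAsync(question);
    return response.Text;
}

using IChatClient client = new EchoChatClient();
string answer = await AskAsync(client, "Hello");
Console.WriteLine(answer); // prints "Echo: Hello"

// Hypothetical provider, for illustration only: it echoes the last message back.
sealed class EchoChatClient : IChatClient
{
    public Task<ChatResponse> GetResponseAsync(
        IEnumerable<ChatMessage> messages,
        ChatOptions? options = null,
        CancellationToken cancellationToken = default)
    {
        string last = messages.LastOrDefault()?.Text ?? string.Empty;
        return Task.FromResult(new ChatResponse(new ChatMessage(ChatRole.Assistant, $"Echo: {last}")));
    }

    public async IAsyncEnumerable<ChatResponseUpdate> GetStreamingResponseAsync(
        IEnumerable<ChatMessage> messages,
        ChatOptions? options = null,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        // Reuse the non-streaming path and emit the whole reply as a single update.
        ChatResponse response = await GetResponseAsync(messages, options, cancellationToken);
        yield return new ChatResponseUpdate(ChatRole.Assistant, response.Text);
    }

    // Return this instance when asked for a type it implements (and no key is given).
    public object? GetService(Type serviceType, object? serviceKey = null) =>
        serviceKey is null && serviceType.IsInstanceOfType(this) ? this : null;

    public void Dispose() { }
}
```

Swapping EchoChatClient for a real provider's IChatClient requires no change to AskAsync, which is the point of the abstraction.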

Remark: Although Microsoft.Extensions.AI was originally created as a separate library, Microsoft is actively working on integrating it with Semantic Kernel to provide a consistent experience.

Getting started

To get started, you will typically need at least these two NuGet packages:

dotnet add package Microsoft.Extensions.AI

dotnet add package Microsoft.Extensions.AI.Abstractions

Depending on how you want to interact with an LLM, you'll need some extra packages. In our case, we'll start with a local language model running in AI Foundry Local, so add the following NuGet package:

dotnet add package Microsoft.Extensions.AI.OpenAI

Remark: AI Foundry Local exposes an OpenAI-compatible API, which is why the OpenAI package works with it.

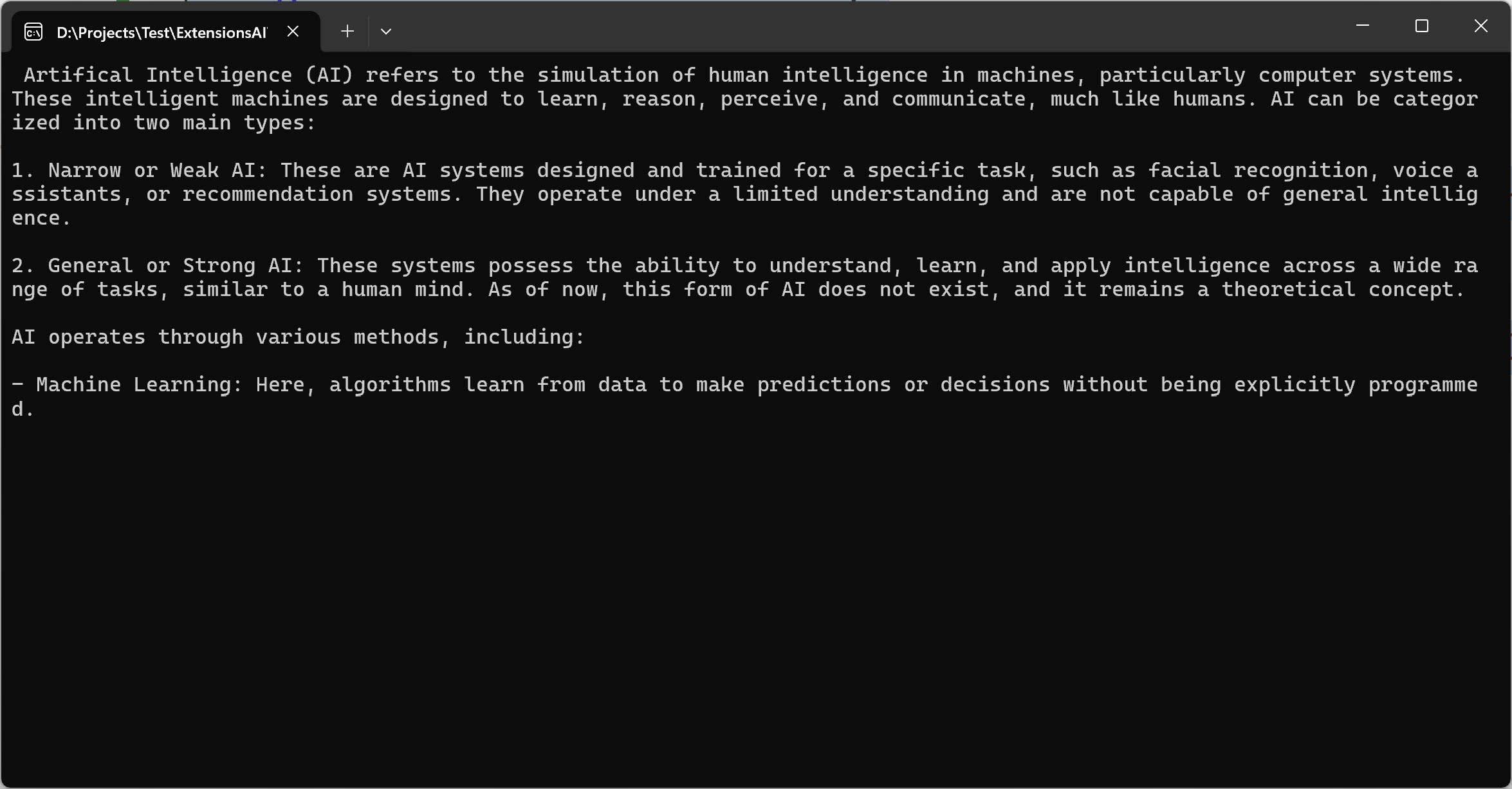

Now we can start writing some code. To build a basic chat interface, you need only two lines of code:
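A minimal sketch of those two statements (plus one to print the answer) might look like the following. It assumes recent package versions (AsIChatClient() was named AsChatClient() in earlier previews), that Foundry Local listens at http://localhost:5273/v1, and that the model id is Phi-3.5-mini-instruct-generic-cpu. The port and model id are assumptions; use the values your own Foundry Local instance reports. The first statement creates an IChatClient on top of the OpenAI SDK, and the second sends a prompt.

```csharp
using System;
using System.ClientModel;
using Microsoft.Extensions.AI;
using OpenAI;

// Assumed endpoint and model id: replace them with what your Foundry Local instance reports.
IChatClient chatClient = new OpenAIClient(
        new ApiKeyCredential("unused"), // the SDK requires a key; a local service is assumed not to check it
        new OpenAIClientOptions { Endpoint = new Uri("http://localhost:5273/v1") })
    .GetChatClient("Phi-3.5-mini-instruct-generic-cpu")
    .AsIChatClient();

// Send a single user prompt and print the model's reply.
ChatResponse response = await chatClient.GetResponseAsync("What is Microsoft.Extensions.AI?");
Console.WriteLine(response.Text);
```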

Let's start our AI Foundry Local API:

foundry model run phi-3.5-mini

Then run our app with dotnet run.

Great! That is a good starting point.
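A natural next step is a chat loop that remembers earlier turns. The sketch below is one way to do it, assuming (as a hypothetical setup) a Foundry Local endpoint at http://localhost:5273/v1 and the model id Phi-3.5-mini-instruct-generic-cpu. It keeps the conversation in a List<ChatMessage>, streams each reply with GetStreamingResponseAsync, and appends the assistant's answer to the history so the next request includes it.

```csharp
using System;
using System.ClientModel;
using System.Collections.Generic;
using System.Text;
using Microsoft.Extensions.AI;
using OpenAI;

// Assumed endpoint and model id: replace them with what your Foundry Local instance reports.
IChatClient chatClient = new OpenAIClient(
        new ApiKeyCredential("unused"),
        new OpenAIClientOptions { Endpoint = new Uri("http://localhost:5273/v1") })
    .GetChatClient("Phi-3.5-mini-instruct-generic-cpu")
    .AsIChatClient();

// The whole conversation is resent on every turn so the model sees earlier context.
var history = new List<ChatMessage>
{
    new ChatMessage(ChatRole.System, "You are a helpful assistant.")
};

while (true)
{
    Console.Write("You: ");
    string? input = Console.ReadLine();
    if (string.IsNullOrWhiteSpace(input))
    {
        break; // an empty line (or end of input) ends the chat
    }

    history.Add(new ChatMessage(ChatRole.User, input));

    // Print the reply as it streams in and collect its text.
    var reply = new StringBuilder();
    Console.Write("AI: ");
    await foreach (ChatResponseUpdate update in chatClient.GetStreamingResponseAsync(history))
    {
        Console.Write(update.Text);
        reply.Append(update.Text);
    }
    Console.WriteLine();

    // Store the assistant's answer so the next turn includes it.
    history.Add(new ChatMessage(ChatRole.Assistant, reply.ToString()));
}
```

Resending the full history is the simplest design; for long conversations you would eventually need to trim or summarize it to stay within the model's context window.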

In the next post, I'll integrate Microsoft.Extensions.AI into an ASP.NET Core application.

More information

Microsoft.Extensions.AI libraries - .NET | Microsoft Learn

Microsoft.Extensions.AI: Integrating AI into your .NET applications

Introducing Microsoft.Extensions.AI Preview - Unified AI Building Blocks for .NET - .NET Blog

Microsoft.Extensions.AI: Simplifying AI Integration for .NET Partners | Semantic Kernel