If you follow my blog, you have probably noticed that I'm experimenting a lot with running large language models locally. I typically expose these LLMs through Ollama and use either Semantic Kernel or the API directly to test and interact with them.

Recently I discovered OpenWebUI, an open source web interface designed primarily for interacting with AI language models. It offers a clean, intuitive interface that makes it easy to have conversations with AI models while providing advanced features for developers and power users.

Some of the key features of OpenWebUI are:

- OpenAI API Integration: Effortlessly integrate OpenAI-compatible APIs for versatile conversations alongside Ollama models.
- Granular Permissions and User Groups: Create detailed user roles and permissions for a secure and customized user environment.
- Full Markdown and LaTeX Support: Comprehensive Markdown and LaTeX capabilities for enriched interaction.
- Model Builder: Easily create Ollama models directly from Open WebUI.
- Local and Remote RAG Integration: Cutting-edge Retrieval Augmented Generation (RAG) technology within chats.
- Web Search for RAG: Perform web searches and inject results directly into your chat experience.
- Web Browsing Capabilities: Seamlessly integrate websites into your chat experience.
- Image Generation Integration: Incorporate image generation capabilities using various APIs.
- Concurrent Model Utilization: Engage with multiple models simultaneously for optimal responses.
- Role-Based Access Control (RBAC): Ensure secure access with restricted permissions.
- Pipelines Plugin Framework: Integrate custom logic and Python libraries into Open WebUI.

We’ll explore some of these features in a later post, but today we focus on how to get started.

Getting started

The easiest way to get started with OpenWebUI is through Docker. Run the following command to start the container:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda
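To make the individual flags in the command above easier to read, here is a minimal Python sketch that assembles the same argument list piece by piece. The function name `build_docker_command` and its parameters (`host_port`, `volume`, `tag`, `use_gpu`) are my own illustrative choices and not part of OpenWebUI or Docker. The sketch only builds and prints the command; it does not run it.

```python
import shlex


def build_docker_command(host_port=3000, volume="open-webui", tag="cuda", use_gpu=False):
    """Return the `docker run` argument list for an OpenWebUI container.

    Hypothetical helper for illustration only; the defaults reproduce
    the command shown in this post.
    """
    args = [
        "docker", "run",
        "-d",                                # run detached, in the background
        "-p", f"{host_port}:8080",           # publish container port 8080 on the host
        # lets the container reach services on the host (such as Ollama)
        "--add-host=host.docker.internal:host-gateway",
        "-v", f"{volume}:/app/backend/data", # named volume for the app's data
        "--name", "open-webui",              # fixed container name
        "--restart", "always",               # restart the container automatically
    ]
    if use_gpu:
        # Docker flag that exposes the host GPUs to the container
        args += ["--gpus", "all"]
    # the image reference always comes last
    args.append(f"ghcr.io/open-webui/open-webui:{tag}")
    return args


if __name__ == "__main__":
    # Print the command instead of executing it
    print(shlex.join(build_docker_command()))
```

Changing `host_port` also changes the address you browse to afterwards: with the default of 3000 the UI is served on port 3000 of the host.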

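Remark: There are multiple parameters that can be provided; check out the readme file for more details. Note that the :cuda image targets Nvidia GPUs, and Docker only passes a GPU to the container when you add --gpus all to the command. On a machine without a GPU, the :main image is the usual choice.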
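The container can need some time before the web interface answers, especially on the first start. As a rough sketch, the following Python snippet polls the address until it responds or a timeout expires. The function name `wait_until_up` and the timeout values are assumptions of mine; the URL follows from the 3000:8080 port mapping in the command above.

```python
import time
import urllib.request


def wait_until_up(url="http://localhost:3000", timeout=60.0, interval=2.0):
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds pass.

    Hypothetical helper for illustration; returns True when the page
    responded in time and False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Connection refused, timeouts and HTTP errors all end up here
            # (urllib.error.URLError is a subclass of OSError).
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    if wait_until_up():
        print("OpenWebUI is reachable")
    else:
        print("No response yet; check the container logs")
```

If the check keeps failing, `docker logs open-webui` shows what the container is doing.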

After the container has started, you can browse to http://localhost:3000. The first time you run the container, you get a welcome page:

After clicking on Get started, you need to create an admin account:

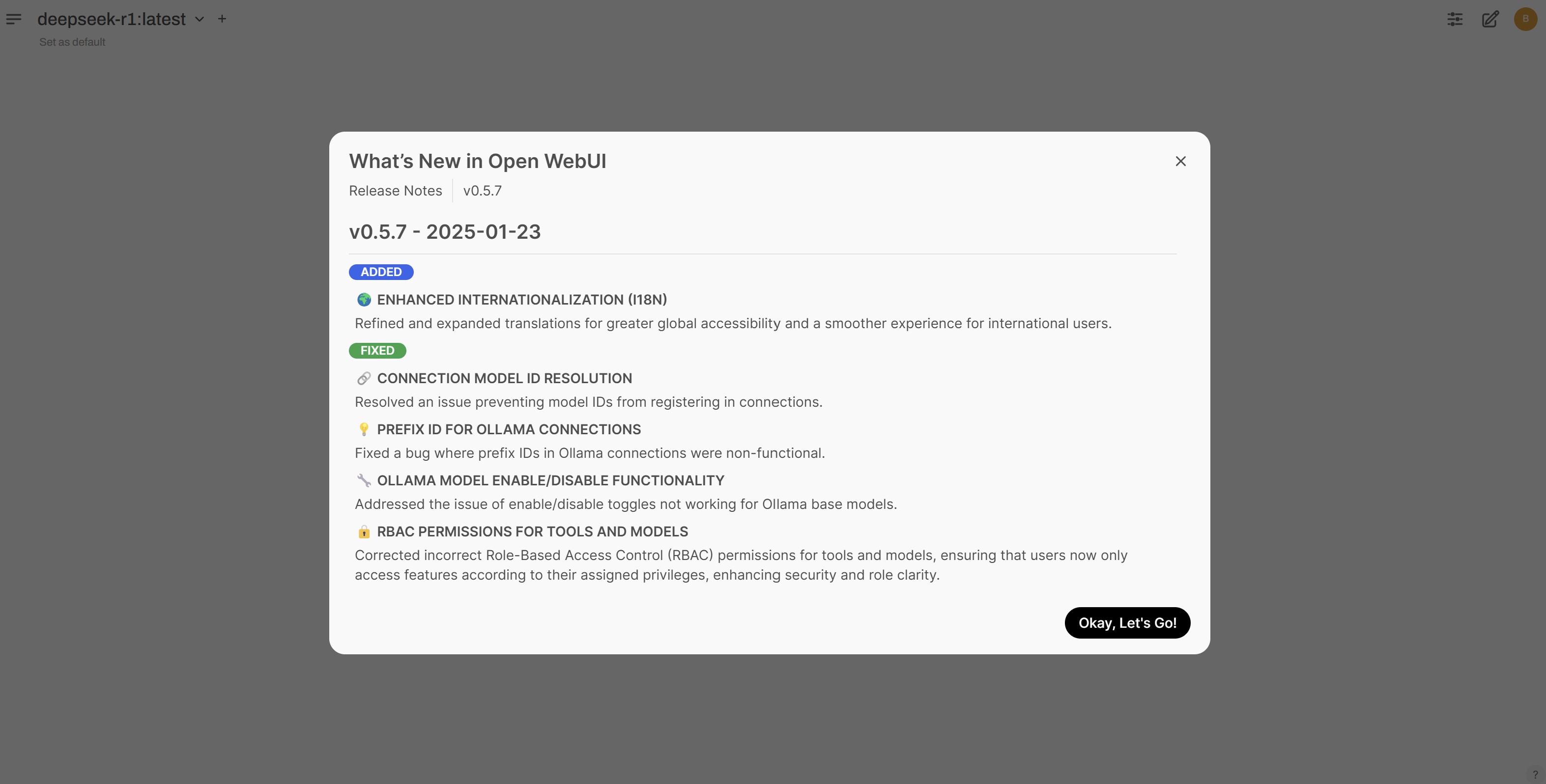

Now we arrive at the main OpenWebUI screen. If you have used ChatGPT or Microsoft Copilot before, the experience will feel familiar.

You can select a different model by clicking on the dropdown in the left corner:

Now we can send a message to the model and wait for some results:

The output is shown in the chat interface:

Configure OpenWebUI

There are a lot of things we can configure when using OpenWebUI. To access the admin settings, click on the user icon in the top right corner and choose Admin Panel:

In the Admin Panel, click on the Settings tab:

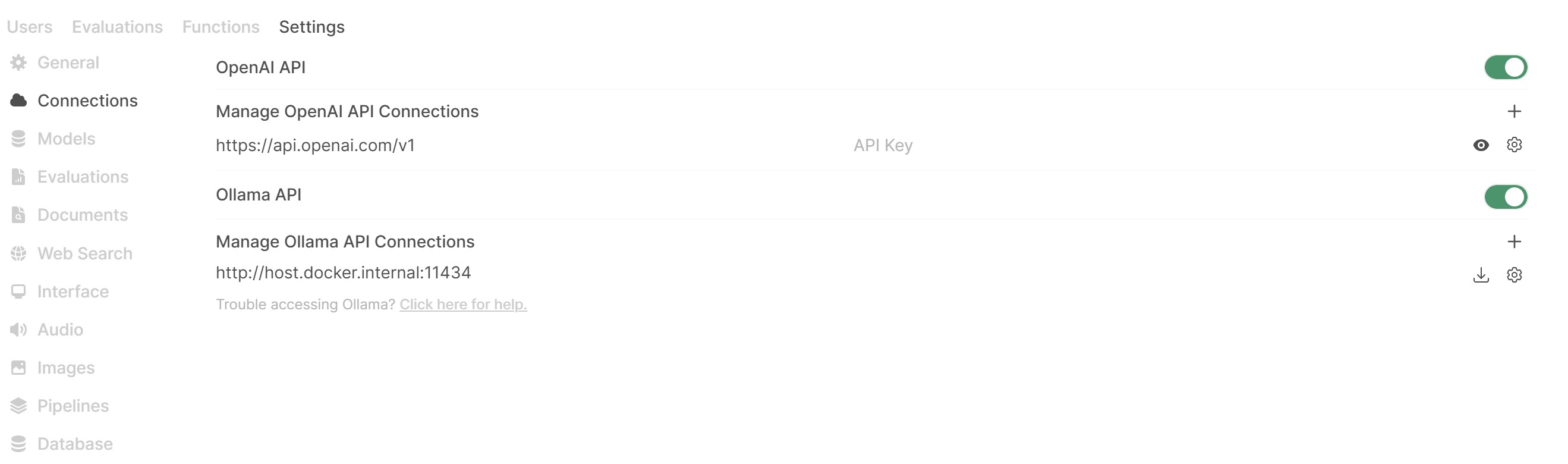

Now we get an overview of all available settings. For example, in the Connections section, we can see and manage the list of available connections:

By clicking on the configure icon next to a specific connection, we can configure it further:

Conclusion

More information

open-webui/open-webui: User-friendly AI Interface (Supports Ollama, OpenAI API, ...)