In Visual Studio 2013, SQL Server Data Tools (SSDT) are available out of the box. They add a new project template, the Database Project, which lets you manage your database objects and scripts in a structured way.

In this post I explain how to enable continuous integration so that your database changes are deployed automatically every time you check in code.

Preparation

Before we start, make sure you have created a Database Project and added some database objects to it. SQL Server Data Tools must also be installed on your TFS build agent. If you are using Visual Studio Online (as I do in this post) together with the hosted build controller, the Data Tools are already installed. (Here is the full list of installed components: http://listofsoftwareontfshostedbuildserver.azurewebsites.net/)

Add a Publish Profile

Open your Database Project in Visual Studio. Once Visual Studio is ready, right-click the Database Project and choose Publish…

The Publish Database window opens. Click Edit… to set the connection to the database you want to publish the project to. Then click Save Profile As… to save the publish profile and add it to your Database Project. Make sure the profile is checked in together with the rest of the project: the build server only has access to files that are in source control.

Because we don’t want to deploy right now, click Cancel to close the window once the publish profile is saved.
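The build server deploys to whatever the saved profile says, so it can be worth checking the file before you check it in. Below is a minimal Python sketch (standard library only) that reads a publish profile and reports which target settings it contains. The embedded profile is a hypothetical, abbreviated example: the server and database names are placeholders, and the file Visual Studio writes for you may contain more elements. Treating `TargetDatabaseName` and `TargetConnectionString` as "required" is an assumption of this sketch, not a rule defined by SSDT.

```python
import xml.etree.ElementTree as ET

# XML namespace used by MSBuild files, including *.publish.xml profiles.
MSBUILD_NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}

# Hypothetical, abbreviated publish profile; all values are placeholders.
SAMPLE_PROFILE = """\
<Project ToolsVersion="12.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <TargetDatabaseName>Northwind</TargetDatabaseName>
    <DeployScriptFileName>NorthwindDBProject.sql</DeployScriptFileName>
    <TargetConnectionString>Data Source=myserver.example.com;Integrated Security=True;Pooling=False</TargetConnectionString>
    <ProfileVersionNumber>1</ProfileVersionNumber>
  </PropertyGroup>
</Project>
"""

# Settings this sketch assumes an unattended publish needs.
REQUIRED = ("TargetDatabaseName", "TargetConnectionString")


def profile_settings(root):
    """Collect every property from the profile's PropertyGroup elements."""
    settings = {}
    for group in root.findall("msb:PropertyGroup", MSBUILD_NS):
        for prop in group:
            # ElementTree reports tags as "{namespace}Name"; keep only Name.
            name = prop.tag.split("}", 1)[-1]
            settings[name] = (prop.text or "").strip()
    return settings


def missing_settings(settings):
    """Return the required settings that are absent or empty."""
    return [key for key in REQUIRED if not settings.get(key)]


if __name__ == "__main__":
    # For a real file: ET.parse("NorthwindDBProject.publish.xml").getroot()
    settings = profile_settings(ET.fromstring(SAMPLE_PROFILE))
    print("Target database:", settings.get("TargetDatabaseName"))
    print("Missing settings:", missing_settings(settings) or "none")
```

Reading the real file with `ET.parse` avoids any issue with the XML declaration at the top of the saved profile.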

Creating a new Build Definition

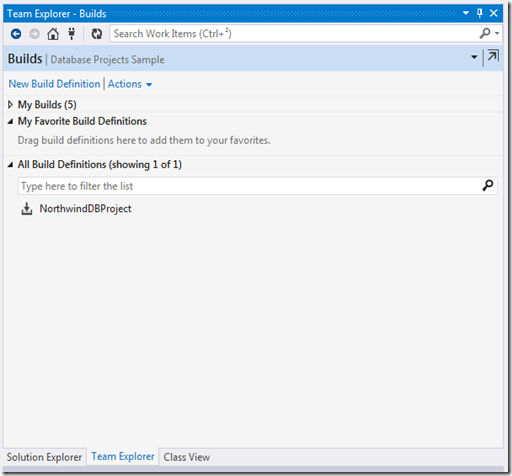

Now that everything is configured, it’s time to open Team Explorer (View > Team Explorer).

Go to the Builds page and click New Build Definition.

Enter a name for your build definition and move on to the Trigger tab.

Set the Trigger type to Continuous Integration and jump directly to the Build Defaults tab.

On the Build Defaults tab, select a build controller whose agents have SQL Server Data Tools installed. Because I’m using Visual Studio Online, I choose the Hosted Build Controller. I also choose to copy the build output to a Drops folder in source control. Next, move on to the Process tab.

On the Process tab, expand the Advanced section and paste the following line into the MSBuild Arguments field:

```
/t:build /t:publish /p:SqlPublishProfilePath=NorthwindDBProject.publish.xml
```

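Replace the SqlPublishProfilePath value with the file name of the publish profile you saved in your Database Project. The build target compiles the project into a .dacpac, and the publish target then deploys it to the database described in that profile.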
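A failing publish is often quicker to diagnose on your own machine than in a build log, so you may want to run the same targets locally first. Here is a minimal Python sketch (standard library only) that assembles an equivalent msbuild command line. The project file name `NorthwindDBProject.sqlproj` is an assumption inferred from the profile name, and `msbuild_command` and `publish_locally` are hypothetical helpers, not part of SSDT or TFS. Note that actually running the command deploys to the database in your profile.

```python
import shutil
import subprocess


def msbuild_command(project, publish_profile, msbuild="msbuild"):
    """Return an msbuild command line mirroring the MSBuild Arguments field:
    build the Database Project, then publish it with the given profile."""
    return [
        msbuild,
        project,
        "/t:build",
        "/t:publish",
        f"/p:SqlPublishProfilePath={publish_profile}",
    ]


def publish_locally(project, publish_profile):
    """Run the command with the msbuild found on PATH; return its exit code."""
    exe = shutil.which("msbuild")
    if exe is None:
        raise FileNotFoundError(
            "msbuild is not on PATH; try a Developer Command Prompt for VS2013")
    return subprocess.call(msbuild_command(project, publish_profile, exe))


if __name__ == "__main__":
    # Hypothetical file names; replace them with your own project and profile.
    cmd = msbuild_command("NorthwindDBProject.sqlproj",
                          "NorthwindDBProject.publish.xml")
    # Dry run: print the command instead of executing it.
    print(" ".join(cmd))
```

Run as a script, it only prints `msbuild NorthwindDBProject.sqlproj /t:build /t:publish /p:SqlPublishProfilePath=NorthwindDBProject.publish.xml`; call `publish_locally` from the project folder when you really want to deploy.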

Save the build definition and you are done! There are many other settings you can configure, but the defaults should be enough to get started.

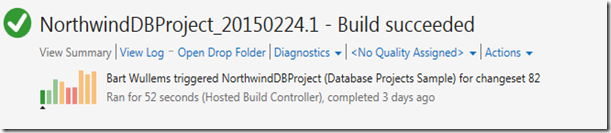

Check in and see what happens…

As a last step, make some changes to your database project, check them in to TFS, and watch the build roll them out to your database…